Raster Vision

-

Introducing: Raster Vision v0.20

We outline Raster Vision v0.20, which introduces new features, improved documentation, and an entirely new way to use the project.

-

Automated Building Footprint Extraction (Part 2): Evaluation Metrics

In the second part of our Automated Building Footprint Extraction series, we review some evaluation metrics for building footprint extraction.

-

A Human-in-the-Loop Machine Learning Workflow for Geospatial Data

In this blog post, we demonstrate how an active learning approach, built on a human-in-the-loop workflow, can boost machine learning model performance.

-

Change detection with Raster Vision

This blog explores the direct classification approach to change detection using our open-source geospatial deep learning framework, Raster Vision, and the publicly available Onera Satellite Change Detection (OSCD) dataset.

-

Introducing Raster Vision 0.13

This release presents a major jump in Raster Vision’s power and flexibility. The newly added features allow finer control over model training as well as greater flexibility in ingesting data.

-

The Business Case for Raster Vision

Raster Vision is the interface between the fields of earth observation and deep learning, making it easier to apply novel computer vision techniques to geospatial imagery of all types. Joe lays out how it can be implemented in your organization and how it can give you a competitive advantage.

-

Introducing Raster Vision 0.12

We refactored the Raster Vision codebase from the ground up to make it simpler, more consistent, and more flexible. Check out Raster Vision 0.12.

-

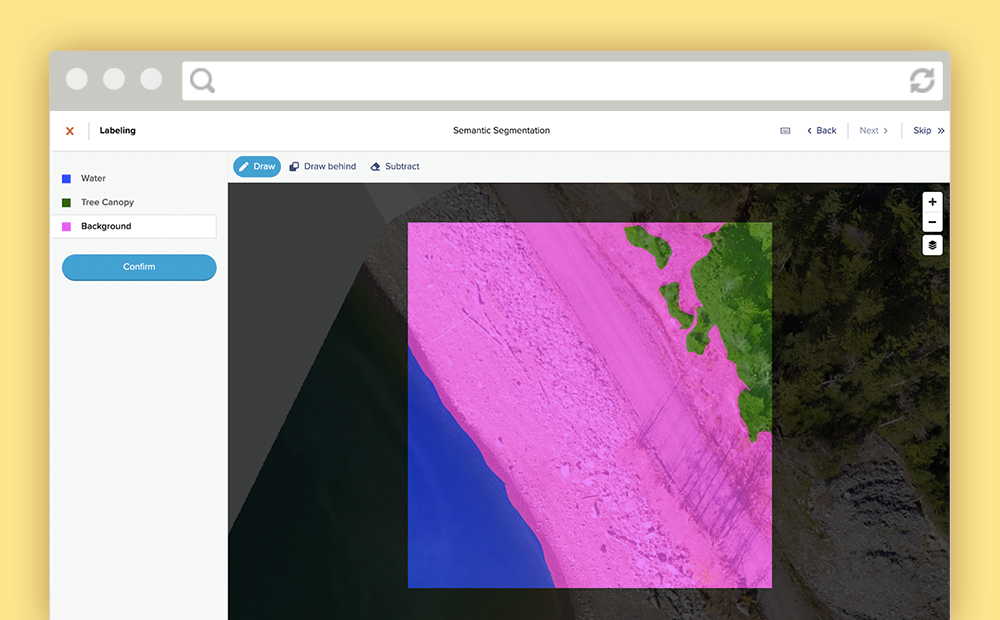

Labeling Satellite Imagery for Machine Learning

Definitions and visuals of the most common ways to label satellite imagery for machine learning. Includes tips for managing annotations for each type.

-

Join the OpenCities AI Challenge and Detect Building Footprints from Aerial Imagery

We partnered with DrivenData and the World Bank to develop the Open Cities AI Challenge. This challenge uses machine learning to extract building footprints in unmapped areas to promote disaster resilience.