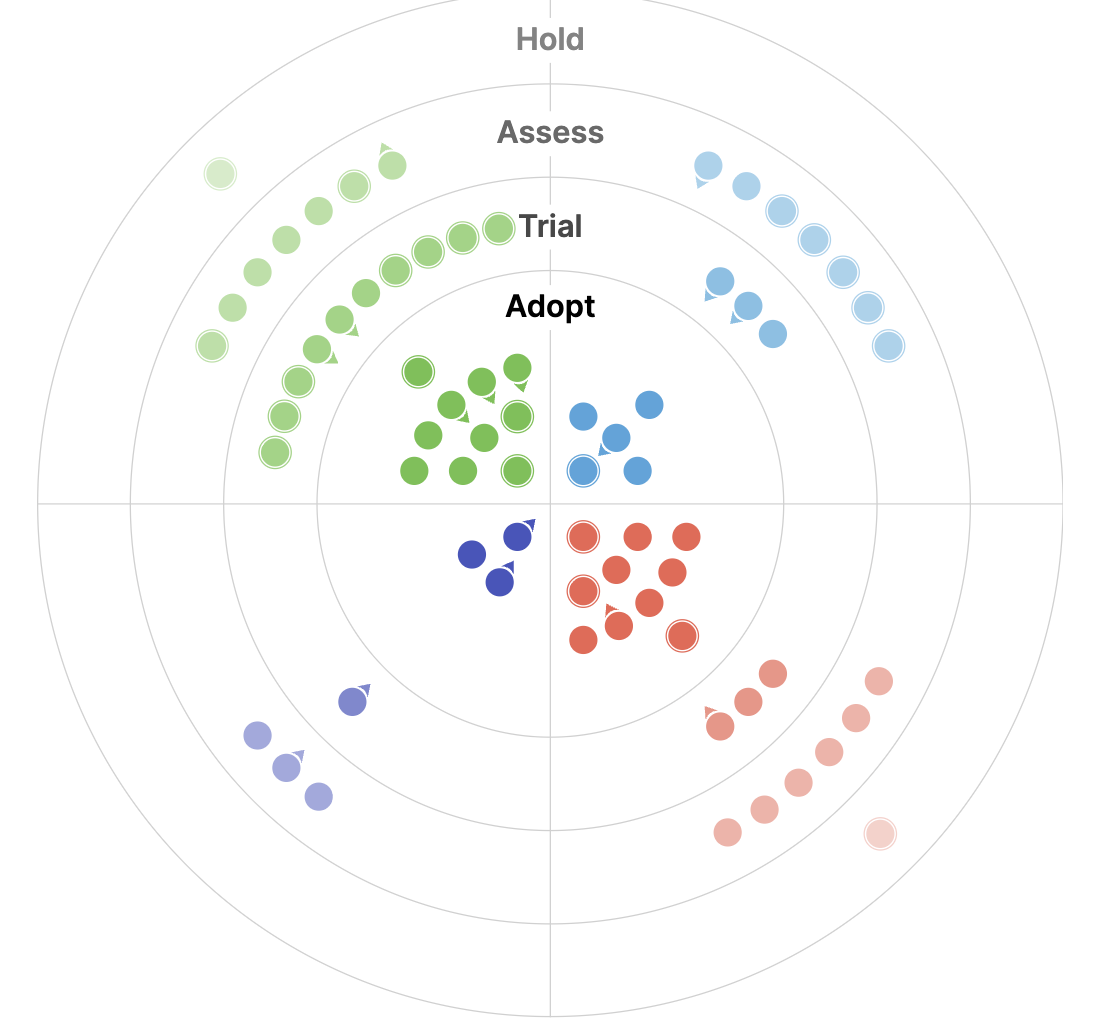

For the third year running, we’re introducing a new and updated version of our tech radar this fall. This year’s radar maintains last year’s focus on developments in AI and reflects several key themes that we describe in more detail in the remainder of this post, including MCP and agentic AI, EO foundation models and embeddings, and the continued evolution of cloud-native geospatial.

Internally, the tech radar represents a key opportunity for members across the Element 84 team to share new tools and trends they’ve noticed throughout the year with their peers – all while picking up techniques and unlocking new strategies for their own project work.

By design, the tech radar becomes an even more collaborative resource with each annual evolution. In this year’s edition we continued to consider external submissions from the community, and we encouraged open conversation about the radar during internal Community of Practice meetings throughout the past year. As always, the tech radar is a living and evolving beast, and we look forward to hearing your thoughts about our findings and reflections, as well as new ideas for future editions!

Beyond chat: agentic AI and MCP

In 2025, AI agents are mainstream and continue to transform how people interact with technology. In software development, these agents are capable of understanding entire repositories, tracking dependencies, and planning multi-step development tasks with minimal human supervision. Beyond coding, they reason with relevant context, maintain goals over time, and take meaningful actions across tools and workflows. Their growing ability to operate on larger context windows and adapt to complex objectives marks a shift from conversational AI to autonomous, purpose-driven systems that extend previous chat capabilities and put AI Agents and AI Coding Assistants squarely in Adopt.

At the core of this transformation is the Model Context Protocol (MCP), a new standard that allows AI systems to connect with external data and tools efficiently. MCP gives LLMs a standardized way to interact with tools, enabling them to retrieve live context, call APIs, and interact with complex environments. This is a marked advancement from last year, when we identified LLM/chat integrations as a main tech radar highlight. Where last year we highlighted a growing list of LLMs, this year there is an ever-growing list of MCP servers, reflected in MCP and FastMCP debuting in Adopt.
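To make the "standardized way to interact with tools" a little more concrete, here is a minimal sketch of the request and response shape for a single tool call. It assumes our reading of the protocol (JSON-RPC 2.0 messages with a `tools/call` method) and uses only the Python standard library. The `get_scene_count` tool, its arguments, and the counts it returns are hypothetical, and a real server would more likely be built with an SDK such as FastMCP than hand-rolled like this.

```python
import json

# Hypothetical tool: the name, argument, and counts are made up for illustration.
def get_scene_count(collection: str) -> str:
    counts = {"sentinel-2-l2a": 3, "landsat-c2-l2": 5}
    return f"{counts.get(collection, 0)} scenes in {collection}"

# Registry mapping tool names to plain Python callables.
TOOLS = {"get_scene_count": get_scene_count}

def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 message shaped like an MCP tool call."""
    request = json.loads(raw)
    request_id = request.get("id")

    def error(code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": request_id,
                           "error": {"code": code, "message": message}})

    if request.get("method") != "tools/call":
        return error(-32601, "Method not found")

    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return error(-32602, "Unknown tool")

    # Run the tool and wrap its output as a list of text content items.
    text = tool(**params.get("arguments", {}))
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    })

# What a client (for example, an agent acting on behalf of an LLM) might send.
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_scene_count",
               "arguments": {"collection": "sentinel-2-l2a"}},
})
response = json.loads(handle_request(request))
print(response["result"]["content"][0]["text"])
```

The point of the sketch is that the model never needs to know how a tool is implemented: it only needs the tool's name and argument schema, and the same message shape works for any server.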

EO foundation models and embeddings

In the geo-specific AI space, the number of Earth Observation (EO) foundation models and publicly available EO vector embedding datasets continues to increase. This year, efforts from the community prioritized handling multiple sensor modalities and resolutions, along with text and geolocation information, in an effort to make these models truly sensor-agnostic. This trend is a continuation of an emerging development noted in last year’s radar. In a significant step toward different modalities, the Prithvi WxC model was introduced as a foundation model specifically for weather and climate data.

A major recent development is the emergence of models like TESSERA and AlphaEarth, which produce pixel-level embeddings, along with their accompanying embedding datasets. This has introduced the notion of “embedding fields.” Unlike patch-level embeddings, these pixel-level embeddings focus on compressing temporal information across a time series of images rather than spatial information across pixels. Their increased granularity gives them an advantage in dense prediction tasks, such as segmentation. We’ve placed this technology in the Assess category this year, coinciding with the shift of Geospatial Vector Embeddings to the Trial category.
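The difference between the two kinds of embedding is easiest to see in the array shapes. The sketch below is not how TESSERA or AlphaEarth work internally: it swaps their learned encoders for simple summary statistics (mean and standard deviation), and the array sizes are arbitrary assumptions chosen only to show which axis gets compressed.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic image time series: 12 dates, 8x8 pixels, 4 spectral bands.
T, H, W, C = 12, 8, 8, 4
series = rng.random((T, H, W, C))

def pixel_level_embedding(series: np.ndarray) -> np.ndarray:
    """Compress the TIME axis: one vector per pixel.

    Mean and standard deviation over time stand in for a learned
    temporal encoder.
    """
    return np.concatenate([series.mean(axis=0), series.std(axis=0)], axis=-1)

def patch_level_embedding(image: np.ndarray, patch: int = 4) -> np.ndarray:
    """Compress the SPATIAL axes: one vector per patch of a single image.

    A per-patch mean stands in for a learned spatial encoder.
    """
    h, w, c = image.shape
    patches = image.reshape(h // patch, patch, w // patch, patch, c)
    return patches.mean(axis=(1, 3))

pixel_emb = pixel_level_embedding(series)     # an "embedding field"
patch_emb = patch_level_embedding(series[0])  # one date, 4x4 patches

print(pixel_emb.shape)  # (8, 8, 8): full spatial resolution is preserved
print(patch_emb.shape)  # (2, 2, 4): spatial resolution is reduced by the patch size
```

Because the pixel-level output keeps one vector per pixel, it can feed a per-pixel classifier directly, which is why this style of embedding suits dense prediction tasks.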

Academia and industry alike have made a strong push toward standardizing, distributing, and benchmarking EO embeddings. The Embed2Scale project and the associated Earth2Vec community are notable collaborative efforts in this direction. Our own contribution to this space was the release of a white paper advocating for an EO embedding catalog. We have been enthusiastic about the potential of EO vector embeddings for some time and will continue to closely monitor developments.

Cloud-native geo

While the core components of the cloud-native geospatial stack have been production-ready for a few years, 2025 is the year the community truly hit its stride. The inaugural Cloud-Native Geospatial Conference in Utah exemplified this maturity, shifting the conversation from “what if” to “what’s next.” The standard toolbox for data delivery is now clear: PMTiles moved from Trial to Adopt, joining established formats like GeoParquet, COG, STAC, COPC, and the essential tile server TiTiler. These formats are typically served from foundational data sources like the massive Earth on AWS repository, which graduated from Trial to Adopt, and the Planetary Computer, which is back from Hold to Assess with its new Pro preview.

With foundational formats and data sources established, attention has shifted to how we work with the data. This year, Polars debuts in Adopt, offering a massively performant replacement for Pandas. It joins DuckDB, which remains a strong Trial candidate for analytics, and Dask, which continues as a reliable workhorse for scaling out. odc-stac also enters Adopt, bridging STAC metadata with processing by enabling the dynamic creation of xarray datasets from STAC Items.

This maturity also enables the tackling of more specialized problems, such as selecting the correct spatial index. S2 joins H3 in the Adopt ring this year, framing the conversation around a practical trade-off: H3’s friendly hexagons for clean visuals versus S2’s strict, precise hierarchy for situations requiring absolute certainty of a point’s containment within a box.
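To illustrate what a strict hierarchy buys you, here is a small sketch using a plain quadtree over longitude and latitude. This is a deliberately simplified stand-in and not S2's actual cell scheme (S2 projects the sphere onto cube faces and orders cells along a Hilbert curve). The function names and the sample coordinates are our own, chosen for illustration. What carries over is the key property: every child cell lies exactly inside its parent, so containment becomes an exact prefix test on cell IDs.

```python
def quadkey(lon: float, lat: float, level: int) -> str:
    """Return a quadtree cell ID for a point, one digit (0-3) per level."""
    west, east, south, north = -180.0, 180.0, -90.0, 90.0
    digits = []
    for _ in range(level):
        mid_lon = (west + east) / 2
        mid_lat = (south + north) / 2
        digit = 0
        if lon >= mid_lon:   # eastern half
            digit += 1
            west = mid_lon
        else:                # western half
            east = mid_lon
        if lat >= mid_lat:   # northern half
            digit += 2
            south = mid_lat
        else:                # southern half
            north = mid_lat
        digits.append(str(digit))
    return "".join(digits)

def contains(parent_key: str, child_key: str) -> bool:
    """Exact containment: a child cell's ID always starts with its parent's ID."""
    return child_key.startswith(parent_key)

point = (-75.16, 39.95)               # illustrative coordinates
coarse = quadkey(*point, level=6)     # large cell
fine = quadkey(*point, level=12)      # small cell around the same point
elsewhere = quadkey(100.0, -30.0, level=12)

print(contains(coarse, fine))       # True
print(contains(coarse, elsewhere))  # False
```

Hexagons cannot be subdivided into smaller hexagons without gaps or overlaps, so H3's child cells only approximately cover their parent. That is the trade-off described above: a hexagonal grid reads more naturally on a map, while a strictly nested grid gives exact answers to containment questions.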

In the midst of these changes, the world of array data presents some complexity. While the adoption of Zarr v3 gives the format real capability, and we placed new tools like Virtual Zarr stores and Icechunk in Assess as methods to bring cloud-native access to legacy formats, the GeoZarr specification is completely gridlocked, earning it a spot in Hold. This situation means pragmatic workarounds are necessary, contrasting with the stability seen in other areas of the ecosystem.

A tighter radar

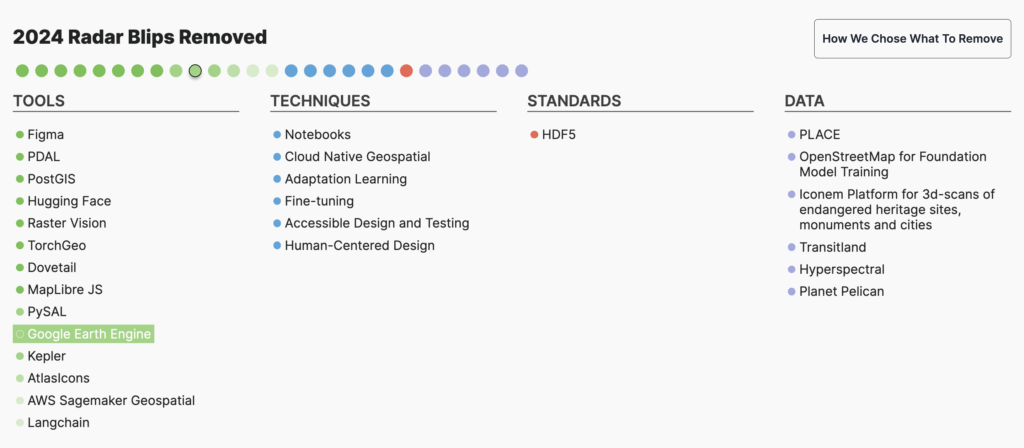

From its beginning, our tech radar was intended to be a prioritization map, not an encyclopedia: a guide to what we at Element 84 are paying attention to now. With this third edition, we have sharpened that mission by actively pruning the list to increase focus.

Broad, evergreen practices like Human-Centered Design and well-established tools like Figma, Hugging Face, and PostGIS have been removed. The concept of “Cloud-Native Geospatial” has also been removed as its own category, as it is now so foundational that it is simply the default operational context.

These items are the bedrock of our work, but they are no longer in flux. The radar’s primary job is to map what is in motion: the formats, engines, and standards that require a decision, an experiment, or close observation this year. By trimming what is stable, we maintain the original goal: to provide a clear, opinionated guide to the technologies actively shaping our future.

The future of the Geospatial Tech Radar

The tech radar continues to be an important way for us to reflect on the tools that are making an impact on our work and to share industry developments both internally and with our community. As we reach our third iteration, it’s been particularly fascinating to observe the evolution of industry trends through the years. Although the tech radar will always reflect the position of Element 84 specifically, we appreciate the opportunity to incorporate new blips that we may not have otherwise considered.

In the third edition of the tech radar, we integrated suggestions from members across the public and private sectors to ensure that we are continuing to prioritize items that are relevant for the community as a whole. If you’re interested in contributing to future editions of the radar, or if you have feedback or thoughts about this one, we’re all ears! Send us a message with your ideas, or stay tuned for an open submission form as we get closer to developing the next radar.