For the second year in a row, I spent the week after Thanksgiving in Las Vegas, immersed in AWS re:Invent. Last year’s conference left me energized and inspired, as geospatial AI was just beginning to emerge as a transformative force. This year, as anticipated, AI took center stage, with a wealth of sessions dedicated to its advancements. While AI was the dominant theme, I also attended a variety of sessions covering other critical areas of cloud technology. In this post, I’ll share key insights and trends from AWS re:Invent 2024, highlight what stood out compared to 2023, and explore how these developments are shaping both our work at Element 84 and the future of geospatial software solutions.

AI: getting bigger and bigger…

AI models continue to grow in scale, with no signs of slowing down. Tech companies are investing in advanced infrastructure, including nuclear power, to meet the energy demands of increasingly powerful models like OpenAI’s o1. In our recent blog post on sustainable AI, Jason Gilman and I explored this issue and strategies to make AI adoption more environmentally friendly, cost-effective, and widely accessible. The scaling of AI was further underscored at re:Invent with the announcement of Trainium2 ultraservers, which connect 64 Trainium2 chips to support larger models with more parameters. Alongside this, Amazon unveiled Project Rainier, a collaboration with Anthropic to build a massive compute cluster designed for training the next generation of AI models. Once completed, this cluster will rank among the largest supercomputers in the world, highlighting the extraordinary physical and computational resources required to advance AI to its next frontier.

…While also getting smaller and cheaper

Training large foundation models—and even running them—can often be cost-prohibitive and inaccessible to many organizations. Several of Amazon’s announcements at AWS re:Invent reinforced the importance of prioritizing sustainable AI, and we look forward to continuing to advocate for further sustainability as the field evolves.

Prompt caching, a feature recently introduced by OpenAI and Anthropic, is one sustainable AI approach we discussed in our related blog post. By minimizing redundant computation, prompt caching is designed to reduce energy consumption and cost. Amazon Bedrock has now incorporated prompt caching as well, claiming up to 90% cost savings along with reduced latency, a testament to the industry’s focus on efficiency.
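
To get a feel for what an "up to 90%" figure means in practice, here is a rough back-of-the-envelope sketch. It assumes a simplified pricing model in which cached input tokens are billed at a flat discount and cache-write charges are ignored; the function names, the discount parameter, and the prices are illustrative assumptions, not actual Bedrock pricing or APIs.

```python
def estimated_input_cost(prompt_tokens, cached_tokens, price_per_1k, cached_discount=0.9):
    """Estimate input cost when part of the prompt is read from a cache.

    Assumption: cached tokens cost (1 - cached_discount) of the normal price,
    and writing to the cache is free. Real pricing may differ.
    """
    if prompt_tokens <= 0 or not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("need prompt_tokens > 0 and 0 <= cached_tokens <= prompt_tokens")
    uncached_tokens = prompt_tokens - cached_tokens
    cached_price = price_per_1k * (1 - cached_discount)
    return (uncached_tokens * price_per_1k + cached_tokens * cached_price) / 1000


def savings_fraction(prompt_tokens, cached_tokens, cached_discount=0.9):
    """Fraction of input cost saved relative to sending the prompt uncached."""
    if prompt_tokens <= 0 or not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("need prompt_tokens > 0 and 0 <= cached_tokens <= prompt_tokens")
    return cached_discount * cached_tokens / prompt_tokens


if __name__ == "__main__":
    # Hypothetical request: 10,000-token prompt, 8,000 of which are a reused prefix.
    print(f"saved: {savings_fraction(10_000, 8_000):.0%}")
```

Under these assumptions, the saving scales with the share of the prompt that is reused: a request whose prompt is 80% cached prefix saves about 72% of its input cost, and the 90% ceiling is approached only when nearly the entire prompt is cached.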

Another standout announcement at re:Invent was the introduction of the Amazon Nova series of models. While these are large foundation models, the Nova family includes two cost-effective options: Amazon Nova Micro (text-only) and Amazon Nova Lite (multimodal). Additionally, Amazon showcased model distillation as a fine-tuning technique. This method allows larger pre-trained models to be distilled into smaller, more efficient versions tailored to specific use cases.
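
For readers new to distillation, the sketch below shows the classic textbook formulation: a small "student" model is trained to match the temperature-softened output distribution of a larger "teacher". This is only an illustration of the general idea; the sessions did not detail how Amazon's managed distillation feature works internally, and it may well differ (for example, by fine-tuning the student on teacher-generated responses). The function names and example logits are made up for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; higher temperature gives softer outputs."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]   # hypothetical teacher scores for three classes
    student = [2.0, 1.5, 1.0]   # hypothetical student scores for the same input
    print(distillation_loss(teacher, student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what lets a smaller model inherit behavior from a larger one on a narrow task.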

Keeping customer costs down while developing impactful AI solutions in a sustainable way is a primary concern for Element 84 as we head into 2025, and it is exciting to see developments on that front. A wider range of smaller models, along with enhanced features in managed services, will help us achieve those goals.

LLM usage has become prolific

In 2023, much of the AI focus centered on integrating natural language capabilities into application user experiences using Large Language Models (LLMs). Techniques like Retrieval Augmented Generation (RAG), vector embeddings, and prompt engineering dominated the conversation. Fast-forward to 2024, and the landscape has evolved significantly. With the introduction of new models, features, and techniques, it’s clear that LLM usage has become not only prolific but also more accessible and feature-rich.

One notable development is Amazon Bedrock Guardrails, which addresses common challenges and concerns in deploying LLMs. This suite of functionality tackles issues such as hallucinations, the exposure of sensitive information or Personally Identifiable Information (PII), and the generation of harmful content. As emphasized in the responsible AI sessions, Generative AI introduces unique risks compared to traditional machine learning, which relies on curated training data and produces more contained outputs like classifications.

One of the most significant risks for companies, including ours, while using Generative AI is the potential to expose sensitive data, whether it’s proprietary business information or user data. Based on my experience in the Guardrails workshop, the tool offers robust functionality to mitigate these risks.
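
To illustrate the kind of protection at stake, here is a deliberately simple toy sketch of masking PII in text before it is sent to, or returned from, a model. It is not how Guardrails is implemented and does not use any AWS API; the regular expressions, placeholder tokens, and function name are assumptions chosen for illustration, and real PII detection is considerably more involved.

```python
import re

# Toy patterns only: they catch common email and US-style phone formats,
# and will miss many real-world variations.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text):
    """Replace matched emails and phone numbers with placeholder tokens."""
    text = EMAIL.sub("{EMAIL}", text)
    text = US_PHONE.sub("{PHONE}", text)
    return text


if __name__ == "__main__":
    print(redact_pii("Contact jane@example.com or 555-123-4567."))
```

A managed service earns its keep precisely because hand-rolled patterns like these are brittle; the sketch is only meant to show where such a filter sits in the flow of a prompt or response.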

Hallucinations remain a well-documented issue for LLMs, eroding trust at best and posing serious risks to organizations at worst. Guardrails appears to address this with Automated Reasoning checks, which provide error validation. While still in preview, this feature shows promise, although I didn’t have the chance to experiment with it during the workshop. It’s an area I’m eager to explore further.

While no solution is entirely foolproof, the key takeaway here is the significant investment being made to provide tooling that enhances the security and usability of LLMs. These advancements underscore the critical conversations we must continue having as Generative AI matures and integrates further into our workflows.

Developments in Geospatial AI

As AI advances, so does the potential for Geospatial AI to address critical challenges facing the planet, particularly in the fight against climate change. For example, SatSure demonstrated how they use AI during India’s monsoon season to enhance cloudy satellite imagery, enabling better-informed decisions about allocating agricultural funding. Meanwhile, ICEYE highlighted the alarming frequency of disasters, noting over 24 “billion-dollar disasters” in 2024 alone. They showcased solutions for delivering actionable data before, during, and after such events. It’s inspiring to see companies leveraging AI in innovative ways to mitigate the effects of climate change and drive impactful solutions.

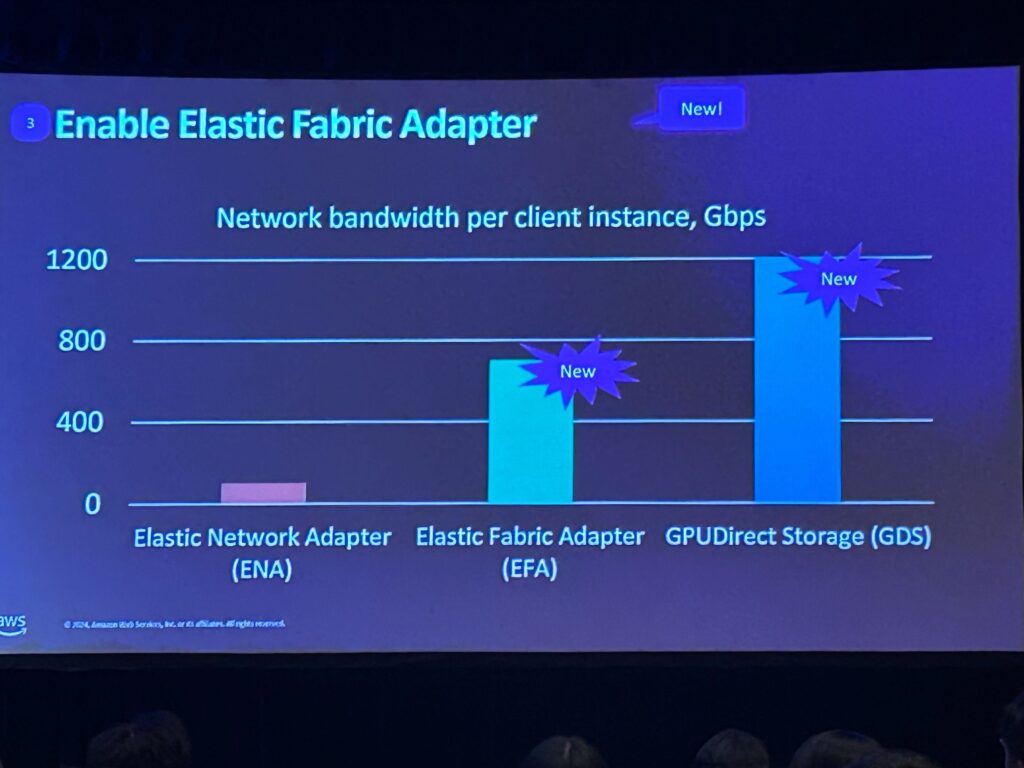

I also attended several sessions on High-Performance Computing (HPC) developments, which relate directly to my work with NOAA EPIC on Numerical Weather Prediction (NWP). HPC systems are increasingly being used for AI, underscoring ongoing investment in this area. One session I attended projected that HPC cloud revenue will exceed $20B by 2027. FSx for Lustre was highlighted as the fastest-performing storage solution for GPU instances, now even faster thanks to its integration with Elastic Fabric Adapter (EFA), which bypasses the operating system, and GPUDirect Storage (GDS), which gives GPUs direct access to storage. These storage innovations will not only accelerate AI model training but also enable faster weather forecasting.

Another noteworthy session explored the new AWS Parallel Computing Service (PCS), a managed service for building and maintaining parallel computing clusters. Previously, this process relied on manual setup using AWS ParallelCluster. With NOAA actively working to make its applications more accessible to students and community members, these cloud advancements help lower barriers to entry by reducing reliance on traditional HPC infrastructure. These improvements will make weather prediction tools more accessible, empowering a broader audience to contribute to improved forecasting.

AI advancements still on the horizon

As AI continues to advance at an unprecedented pace, governance inevitably lags behind. Security must remain a top priority to safeguard our data, privacy, and systems. Bedrock Guardrails represents a promising step forward, and I’m eager to see how it can enhance confidence in implementing AI securely. Responsible AI is an area that resonates deeply with me. I attended several sessions on the topic, and as the implications of AI unfold, I believe it warrants its own dedicated blog post – stay tuned for more coming soon!

Looking ahead, agentic architecture is the next frontier to explore. By AWS re:Invent 2025, I expect to see expanded use cases for AI agents—not just retrieving answers but performing actions autonomously. At a workshop on Bedrock Agents, I had the chance to see this in action (albeit with a few hiccups). While this evolution is exciting, it also introduces a range of security and confidence challenges as we grant AI access to backend systems. Based on my experience so far, these risks are being thoughtfully considered. Bedrock Agents, combined with Guardrails, seem well-positioned to detect threats and mitigate harmful actions, offering a solid foundation for secure, actionable AI.

AWS re:Invent 2024 showcased the incredible strides being made across AI, geospatial technologies, HPC, and cloud computing, all of which are driving innovation and solving some of the world’s most pressing challenges. It’s particularly inspiring to see how these technologies are being harnessed to combat climate change and make data-driven decisions that positively impact communities worldwide. As we look ahead, the continued focus on responsible AI, scalable infrastructure, and collaborative innovation will be critical to ensuring these advancements benefit not just industries, but society as a whole. I’m excited to see how these developments unfold and how we can leverage them to push the boundaries of what’s possible in geospatial AI and beyond.

Want to keep your finger on the pulse of geospatial AI?

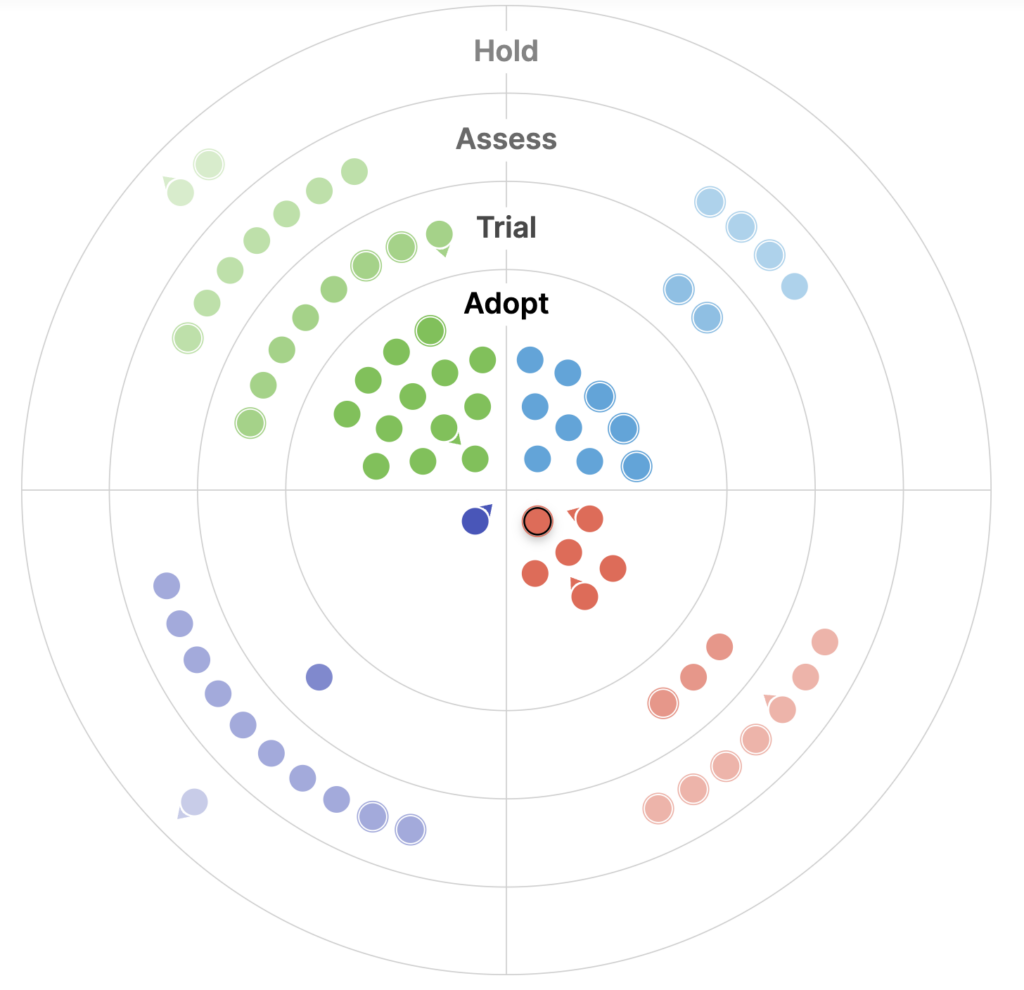

For more of our thoughts on geospatial AI trends, our Geospatial Technology Radar is a good resource to keep an eye on. We source content for the radar each year both within Element 84 and across the geospatial, open source, and earth observation communities. In our 2024 radar, we covered several topics that were discussed heavily at this year’s re:Invent, including the rise in popularity of the Parquet file format, which we expect to extend to GeoParquet. That edition highlighted (among many other topics) exciting developments in Parquet and GeoParquet as their adoption expands, paving the way for enhanced GeoParquet functionality and performance and further solidifying their role in the industry.

To read more insights from our 2024 Tech Radar, check out the blog post we put together summarizing the salient trends we noticed. We’ve already started thinking about the 2025 edition of the radar, and like last year we’ll be accepting community submissions. Stay tuned for submission details and the eventual launch of the latest version! What should we spotlight this year?