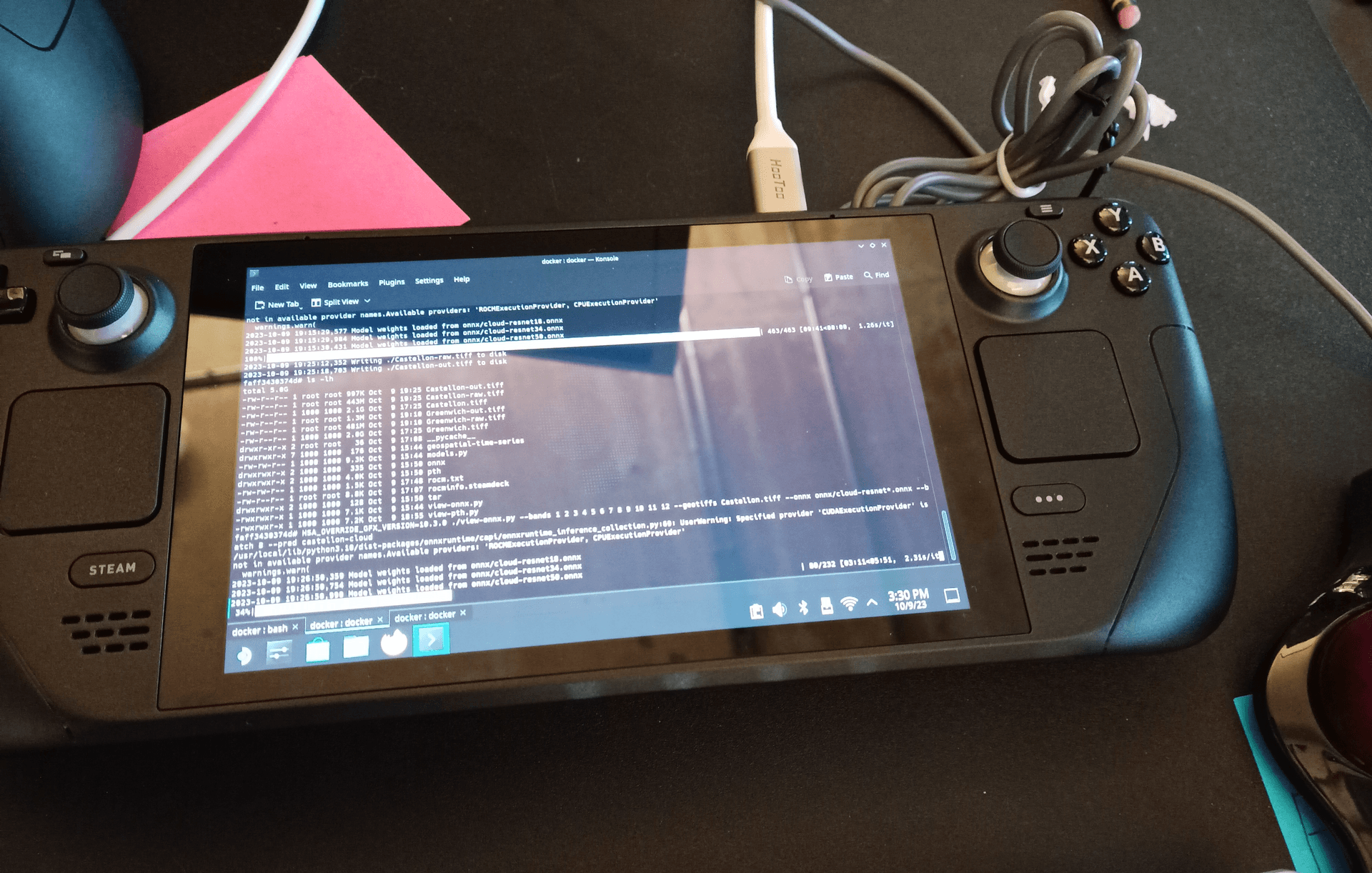

In the world of portable gaming, the SteamDeck has made a notable impact not just as a gaming device, but also as a versatile Linux machine. Leveraging this versatility, enthusiasts and developers alike are finding innovative ways to utilize this handheld powerhouse beyond its primary gaming functionality. In this blog post, we’ll guide you through setting up ROCm 5.4.2, ONNX, and PyTorch on a SteamDeck.

Prepare the Filesystem and the Package Manager

If you have not already done so, please give the user `deck` a password. This can be done by typing the following into a terminal when logged in as the `deck` user:

passwd

That user now has a password, which is needed for running subsequent `sudo` commands.

Next, we need to make the SteamDeck’s operating system mutable, as it is read-only by default.

sudo steamos-readonly disable

We are now ready to initialize the package manager and install packages. If you have not already initialized the package manager, type the following to do so.

sudo pacman-key --init

sudo pacman-key --populate archlinux

Now update the package manager.

sudo pacman -Syu

Setup the Docker Environment

Docker will be the primary tool for running the various containers needed for our setup.

First, we must get docker and other necessary components installed. That can be done by typing the following.

sudo pacman -Syu runc

sudo pacman -Syu containerd

sudo pacman -Syu docker

Now, add the `deck` user to the `docker` group so that you can run docker commands without having to preface them with `sudo`. The new group membership takes effect the next time you log in.

sudo usermod -a -G docker deck

Now enable the necessary services.

sudo systemctl enable containerd.service

sudo systemctl enable docker.service

Finish the Docker Installation

Verify that the containerd and docker services are enabled by typing the following.

sudo systemctl is-enabled containerd.service

sudo systemctl is-enabled docker.service

If those commands respond positively, you can now render the system “read-only” again.

sudo steamos-readonly enable

Running the ONNX Container with ROCm Support
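Before pulling an image, it may be worth confirming that the Docker daemon is actually running: `systemctl enable` only arranges for a unit to start at boot, so on a freshly configured system the services may not be active until you reboot or start them by hand. The sketch below reports both states for each unit. The helper name `check_service` is our own and is not part of systemd.

```shell
#!/bin/sh
# Report whether a systemd unit is enabled (starts at boot) and active (running now).
# check_service is a hypothetical helper name used for illustration.
check_service() {
    unit="$1"
    enabled="$(systemctl is-enabled "$unit" 2>/dev/null || true)"
    active="$(systemctl is-active "$unit" 2>/dev/null || true)"
    # Fall back to "unknown" when systemctl printed nothing (or is unavailable).
    printf '%s: enabled=%s active=%s\n' "$unit" "${enabled:-unknown}" "${active:-unknown}"
}

for unit in containerd.service docker.service; do
    check_service "$unit"
done
```

If a unit reports `active=inactive`, `sudo systemctl start docker.service` starts it without a reboot.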

With Docker set up, you can now pull and run the ONNX runtime with ROCm support.

docker run -it \
--device=/dev/kfd --device=/dev/dri \
--ipc=host \
--cap-add=SYS_PTRACE \
--security-opt seccomp=unconfined \
--group-add video \
jamesmcclain/onnxruntime-rocm:rocm5.4.2-ubuntu22.04

The command above does the following:

- Provides access to GPU devices

- Allows the container to use the IPC namespace of the host

- Grants SYS_PTRACE capability

- Disables the default seccomp profile for the container

- Adds the container to the ‘video’ group, providing access to GPU devices

- Runs the image `jamesmcclain/onnxruntime-rocm:rocm5.4.2-ubuntu22.04`

You may also wish to try the image `jamesmcclain/pytorch-rocm:rocm5.4.2-ubuntu22.04` which provides ROCm-accelerated inference and training for PyTorch.

Inside the container, try typing `rocm-smi` and `rocminfo` to verify that the GPU is visible from within the container and that the ROCm runtime is able to communicate with it.

If you carefully inspect the output of the `rocminfo` command, you will notice that the GPU device in the SteamDeck is a `gfx1033` device. It transpires that ROCm 5.4.2 does not have direct support for this device, but does have support for the closely related `gfx1030` device. For this reason, you will need to have `HSA_OVERRIDE_GFX_VERSION=10.3.0` in the environment whenever you run ROCm code (you can either export it, add it to a `Dockerfile`, or simply preface commands with it).
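To make this concrete, the sketch below pulls the `gfx` target names out of `rocminfo`-style output and shows the override being set both by export and as a one-command prefix. The helper name `gfx_targets` is hypothetical, and the sample line piped into it merely stands in for real `rocminfo` output.

```shell
#!/bin/sh
# List the unique gfx target names found in rocminfo-style text on stdin.
# gfx_targets is a hypothetical helper name used for illustration.
gfx_targets() {
    grep -oE 'gfx[0-9a-f]+' | sort -u
}

# Inside the container you would run:  rocminfo | gfx_targets
# A stand-in line is used here so the snippet runs anywhere.
printf '  Name:                    gfx1033\n' | gfx_targets

# Option 1: export the override for the rest of the shell session.
export HSA_OVERRIDE_GFX_VERSION=10.3.0

# Option 2: prefix a single command with it (printenv just echoes the value back).
HSA_OVERRIDE_GFX_VERSION=10.3.0 printenv HSA_OVERRIDE_GFX_VERSION
```

With Docker, the same variable can be passed when the container starts via `docker run -e HSA_OVERRIDE_GFX_VERSION=10.3.0 ...`, or baked into an image with an `ENV` line.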

Increase VRAM

With docker installed and the containers working, you may find it beneficial to increase the amount of RAM available to the SteamDeck’s GPU. That can be done as follows.

- Power off the SteamDeck.

- Hold the volume up button and the power button together.

- Keep holding those buttons until the SteamDeck boots up; after a short wait you should hear a tone.

- In the BIOS menu, select the Setup Utility.

- Select the advanced settings.

- Scroll to the UMA frame buffer.

- Select it and switch from 1GB to 4GB.
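After rebooting into SteamOS, you can check that the larger frame buffer took effect. The sketch below assumes the `amdgpu` kernel driver's `mem_info_vram_total` sysfs file, which reports dedicated VRAM in bytes. The card index varies between systems, so the loop visits every card it finds. The helper name `bytes_to_mib` is our own.

```shell
#!/bin/sh
# Convert a byte count to whole mebibytes.
# bytes_to_mib is a hypothetical helper name used for illustration.
bytes_to_mib() {
    echo $(( $1 / 1048576 ))
}

# Assumption: the amdgpu driver exposes VRAM size (in bytes) at this sysfs path.
for f in /sys/class/drm/card*/device/mem_info_vram_total; do
    [ -r "$f" ] || continue   # skip cards (or an unmatched glob) without the file
    printf '%s: %s MiB\n' "$f" "$(bytes_to_mib "$(cat "$f")")"
done
```

A 4GB frame buffer should show up as roughly 4096 MiB, and the default 1GB setting as roughly 1024 MiB.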

An Example Workflow

It should now be possible to run a basic workflow. From within the PyTorch container discussed above, we recommend trying the MNIST example that is given in the ROCm documentation.
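Before starting a longer example, a quick smoke test can confirm that PyTorch sees the GPU. The sketch below wraps a subset of the `docker run` flags used earlier in a helper. `run_in_pytorch_container` is a hypothetical name, and we assume that `python3` is on the image's `PATH` and that the image accepts a command to run. ROCm builds of PyTorch expose the GPU through the `torch.cuda` API, so `torch.cuda.is_available()` is the relevant check.

```shell
#!/bin/sh
# Run a command inside the PyTorch ROCm container with GPU access.
# run_in_pytorch_container is a hypothetical helper name used for illustration.
run_in_pytorch_container() {
    docker run --rm \
        --device=/dev/kfd --device=/dev/dri \
        --ipc=host \
        --group-add video \
        -e HSA_OVERRIDE_GFX_VERSION=10.3.0 \
        jamesmcclain/pytorch-rocm:rocm5.4.2-ubuntu22.04 \
        "$@"
}

# Example (run on the SteamDeck; assumes python3 exists in the image):
# run_in_pytorch_container python3 -c 'import torch; print(torch.cuda.is_available())'
```

Defining the helper does not start a container by itself; the commented line shows how it might be invoked.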

Packaging Your Workload

The GitHub repository https://github.com/jamesmcclain/onnxruntime-rocm-build contains the two Dockerfiles from which the above-mentioned Docker images were built. They allow users to build and run workloads using ROCm 5.4.2 with ONNX Runtime and with PyTorch, respectively.

ONNX Runtime with ROCm 5.4.2

The file `Dockerfile` in the repository can be understood as follows.

The Dockerfile begins with the `ubuntu:22.04` base image. The first stage sets up the ROCm and AMDGPU repositories, installs the necessary dependencies, and also installs Python packages essential for our work. The second stage clones the ONNX Runtime repository, checks out the specific commit we want, and builds it. The third stage installs the freshly compiled ONNX Runtime package while leaving behind the intermediate files created during the stage-two build.

To build the ONNX Runtime Docker image, navigate to the directory containing the Dockerfile and run the following command.

DOCKER_BUILDKIT=1 docker build --build-arg ROCM_VERSION=5.4.2 --build-arg AMDGPU_VERSION=5.4.2 --build-arg BUILDKIT_INLINE_CACHE=1 -f Dockerfile -t jamesmcclain:onnx-runtime-rocm5.4.2 .

This command will create a Docker image tagged `jamesmcclain:onnx-runtime-rocm5.4.2` that is substantially identical to the corresponding image on DockerHub.

PyTorch with ROCm 5.4.2

The file `Dockerfile.pytorch-rocm5.4.2` in the GitHub repository can be understood as follows.

This Dockerfile reuses the first stage of the image we built in the previous step (`onnxruntime-rocm-stage1-rocm5.4.2`) as its starting point. In order to tag stage one from the previous image so that it is usable as a base image in a separate Dockerfile, run the following command.

DOCKER_BUILDKIT=1 docker build --cache-from jamesmcclain/onnxruntime-rocm:rocm5.4.2-ubuntu22.04 --build-arg ROCM_VERSION=5.4.2 --build-arg AMDGPU_VERSION=5.4.2 --build-arg BUILDKIT_INLINE_CACHE=1 -f Dockerfile --target stage1 -t onnxruntime-rocm-stage1-rocm5.4.2 .

Using `onnxruntime-rocm-stage1-rocm5.4.2` as a base image, PyTorch and its related libraries are then installed using pip and the specific ROCm version.

To build the PyTorch Docker image, navigate to the directory containing the `Dockerfile.pytorch-rocm5.4.2`. First, run the command given above to build the stage one image from the ONNX image. After you have done that, run the following command.

DOCKER_BUILDKIT=1 docker build --build-arg BUILDKIT_INLINE_CACHE=1 -f Dockerfile.pytorch-rocm5.4.2 -t jamesmcclain:pytorch-rocm5.4.2 .

This will create a Docker image tagged `jamesmcclain:pytorch-rocm5.4.2` that is substantially identical to the corresponding image on DockerHub.

Your Code

Now that you have these Docker images, you can use them as base images to build your own projects. By starting from these images, you’re ensuring that your projects will run in an environment that’s set up for ROCm 5.4.2, with either ONNX Runtime or PyTorch pre-installed. Simply reference them in the `FROM` command of your project’s Dockerfile.

For instance:

FROM jamesmcclain:onnx-runtime-rocm5.4.2

# ...

# Your project's Docker instructions

# ...

If you need deeper customization, you can use the Dockerfiles found in the GitHub repository to make your own custom images.

By leveraging these Dockerfiles and the Docker images they produce, you can ensure consistent, reproducible, and portable execution of your machine learning workloads that rely on ONNX Runtime or PyTorch with ROCm support.
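As a slightly fuller sketch of such a project, the snippet below writes a small Dockerfile into a scratch directory. The script name `infer.py`, the `/app` directory, and the `my-project` tag are placeholders of our own, and we assume `python3` is available in the base image. The `ENV` line bakes in the `HSA_OVERRIDE_GFX_VERSION` override discussed earlier.

```shell
#!/bin/sh
# Create a scratch project directory holding a minimal Dockerfile.
workdir="$(mktemp -d)"

cat > "$workdir/Dockerfile" <<'EOF'
# Base image built in the previous section.
FROM jamesmcclain:onnx-runtime-rocm5.4.2

# Treat the SteamDeck's gfx1033 GPU as the supported gfx1030.
ENV HSA_OVERRIDE_GFX_VERSION=10.3.0

# infer.py is a placeholder for your own entry point.
WORKDIR /app
COPY . /app
CMD ["python3", "infer.py"]
EOF

echo "Wrote $workdir/Dockerfile"

# On a machine with Docker, build it with:
# docker build -t my-project "$workdir"
```

In a real project the Dockerfile would sit next to your source files, so that `COPY . /app` picks them up.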

Conclusion

Congratulations! You now have a SteamDeck ready to harness the power of ROCm, ONNX, and PyTorch. This setup enables you to run deep learning models directly on your SteamDeck, showcasing the device’s versatility beyond gaming. Dive into the world of machine learning on the go with your SteamDeck! Recently, our team implemented this technology to streamline search-and-rescue operations using machine learning. Check it out, and let us know what you think.