I. The Revolution Wasn’t Televised

The way we access and analyze geospatial data has shifted so fundamentally that it’s easy to lose perspective on what things were like before. Less than a decade ago, it was a fractured affair: a hard-to-navigate landscape of bespoke APIs and custom tooling. Every new dataset, whether from a government agency or a commercial provider, was its own puzzle. You couldn’t just find the data; you had to reverse-engineer the means to use it. Today, nearly eight years after the first STAC sprint, we have an interoperable ecosystem built around a common language for describing geospatial information: the SpatioTemporal Asset Catalog (STAC).

Why did STAC succeed where other standardization efforts have stumbled? It wasn’t luck. It was a combination of two key forces: a set of pragmatic structural principles and a developer-focused community culture that stress-tested every assumption against new and challenging datasets. This combination followed a pattern that has come to be known as the Guerilla Standards Playbook.

It’s been a couple of years since I published Part 1, which covered the origin story of STAC. In that piece, we explored the what—the history and the initial sprints that created the spec. Now, it’s time to cover the how and the why of its success.

II. The Guerilla Standards Playbook: Specification vs. Standard

Looking back, STAC’s success came from an approach we can now identify as the “Guerilla Standards Playbook,” a term coined by Howard Butler. It followed in the footsteps of earlier community-driven efforts like GeoJSON and Cloud Optimized GeoTIFF (COG). We didn’t have a formal name for it then. Because we were an informal group from industry and government trying to build something useful, we were creating a Specification first, not a traditional Standard.

This “playbook” wasn’t a formal doctrine. It’s a term that has been more recently articulated to describe the agile and effective patterns that emerged from collaborative efforts like STAC, and its name perfectly captures the spirit of the approach. It distinguishes between two fundamentally different models:

- Formal Standards: Developed by official standards bodies (like the Open Geospatial Consortium, or OGC, and the IEEE), they are historically slower, involve rigorous review, and are focused on top-down consensus. They are built to last, but the process can be lengthy.

- Guerilla Specifications (STAC’s Path): Developed by a community of practitioners, they are fast, driven by implementation needs, minimal by design, and focused on bottom-up adoption. They earn their authority through utility, not decree.

| Attribute | Formal Standards | Guerilla Specifications |
| --- | --- | --- |
| Developed By | Official standards bodies | Community of practitioners |
| Process | Slow, rigorous, top-down consensus | Fast, iterative, implementation-driven |
| Focus | Long-term stability, correctness | Immediate utility, adoption |
| Authority | Earned by formal decree | Earned by utility and community buy-in |
| Goal | A lasting, authoritative standard | A minimal, useful specification |

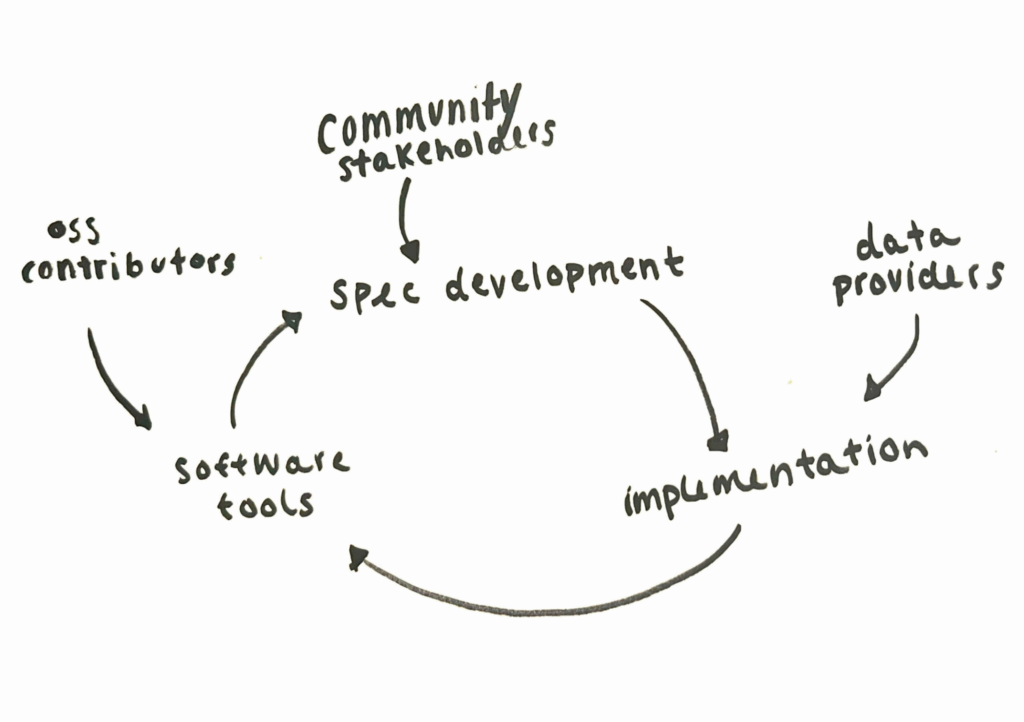

A core reason this playbook works is that it actively avoids a common trap in open source and open standards work: the “Field of Dreams” fallacy, the belief that “if you build it, they will come.” The idea is attractive, but technical merit alone is never enough to guarantee adoption. Users and developers are busy; they adopt solutions that solve their immediate problems with the lowest possible friction. Without a community actively demonstrating value, providing support, and lowering the barrier to entry, even the most elegant specification will be ignored.

Success requires relentless community engagement and building with people, not just for them. When people have a chance to be heard, they feel ownership over the end result and are more likely to become champions who drive adoption. This creates a continuous feedback loop between the specification, the software built to use it, and the community providing feedback. Implementation isn’t just a product of the spec; it’s an input that actively informs and validates it. This is the “Cycle of Development” that powered STAC from its first sprint to its 1.0 release and beyond.

III. Structural Pillars

STAC’s design was driven by implementation, not theory. Every component was chosen for its utility in solving real-world problems.

Standing on the Shoulders of Giants

A key to our agility was leveraging established standards. We didn’t reinvent the wheel. STAC is built on JSON and, for all spatial extents, GeoJSON. These were the obvious, established choices in 2017 that immediately lowered the barrier to entry for developers.
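To make the “built on JSON and GeoJSON” point concrete, here is a minimal sketch of a STAC Item written as a plain Python dictionary. The field names follow the STAC 1.0 Item layout, but the id, coordinates, and asset URL are invented for illustration, and `bbox_of_ring` is a hypothetical helper, not part of any STAC library.

```python
import json

# Illustrative STAC Item. The id, coordinates, and href are made up;
# only the field names come from the STAC 1.0 Item layout.
item = {
    "type": "Feature",  # a STAC Item is a GeoJSON Feature
    "stac_version": "1.0.0",
    "id": "example-scene-001",
    "geometry": {
        "type": "Polygon",
        "coordinates": [[
            [-105.0, 39.0], [-104.0, 39.0], [-104.0, 40.0],
            [-105.0, 40.0], [-105.0, 39.0],
        ]],
    },
    "bbox": [-105.0, 39.0, -104.0, 40.0],
    "properties": {"datetime": "2021-05-25T00:00:00Z"},
    "links": [],
    "assets": {
        "visual": {
            "href": "https://example.com/example-scene-001.tif",
            "type": "image/tiff; application=geotiff; profile=cloud-optimized",
        }
    },
}


def bbox_of_ring(ring):
    """Hypothetical helper: [min_lon, min_lat, max_lon, max_lat] of a ring."""
    lons = [point[0] for point in ring]
    lats = [point[1] for point in ring]
    return [min(lons), min(lats), max(lons), max(lats)]


# Generic GeoJSON handling works on the geometry with no STAC-specific code.
computed_bbox = bbox_of_ring(item["geometry"]["coordinates"][0])

# Plain JSON in, plain JSON out: the Item survives a round trip unchanged.
round_tripped = json.loads(json.dumps(item))
```

Nothing here needs a STAC-aware parser: the standard `json` module and ordinary GeoJSON conventions are enough to read and write the record, which is the low barrier to entry described above.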

Perhaps most importantly, we found a new way to collaborate with formal standards bodies. A crucial part of our strategy was aligning with OGC API – Features (OAF). OAF itself was not developed using a typical standards process. The OGC, in developing its new generation of API standards, was already adopting a more agile, sprint-based approach. This allowed our community to work with them as peers in real time. We held our second and fifth sprints jointly with the OAF group, a parallel effort that let us influence and align with a formal standard while ensuring our developer-centric needs were met. At the time of writing, this collaboration has allowed STAC to become an officially accepted OGC Community Standard.

A Focus on Usability and Tooling

While the ultimate goal was always to serve the end-user—the scientist, the analyst, the decision-maker—we knew the path to that goal was through a healthy ecosystem of software. From day one, the focus was on creating catalogs and tools that were not just compliant, but genuinely useful. We had a working server at the end of the first sprint. While it bears little resemblance to what exists today, its existence was proof of the iterative approach. Building implementations wasn’t just about encouraging users; it was about informing the spec itself by stress-testing our assumptions with working code.

A critical example of this philosophy was the creation of the validation engine, STACLint, during Sprint #3. Building a linter so early was a cultural choice. It sent a clear signal that developer experience was paramount because a good developer experience leads to more and better tools, which directly benefits users. We weren’t just writing a document; we were building a reliable, working system that people could depend on.
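For a sense of what even a very small linter buys implementers, here is a minimal sketch of a structural check on a STAC Item. This is not how STACLint works internally; it is an assumption-laden illustration that checks only the top-level required fields of a STAC 1.0 Item, and `REQUIRED_ITEM_FIELDS` and `find_item_problems` are hypothetical names.

```python
# Top-level fields a STAC 1.0 Item must carry. A real validator would check
# the full JSON Schema; this sketch only looks at field presence.
REQUIRED_ITEM_FIELDS = (
    "type", "stac_version", "id", "geometry", "properties", "links", "assets",
)


def find_item_problems(item: dict) -> list:
    """Hypothetical helper: return human-readable problems found in an Item."""
    problems = []
    for field in REQUIRED_ITEM_FIELDS:
        if field not in item:
            problems.append(f"missing required field: {field}")
    if "type" in item and item["type"] != "Feature":
        problems.append('"type" must be "Feature"')
    # bbox is only mandatory when the Item has a non-null geometry.
    if item.get("geometry") is not None and "bbox" not in item:
        problems.append('"bbox" is required when "geometry" is not null')
    return problems


good = {
    "type": "Feature",
    "stac_version": "1.0.0",
    "id": "a",
    "geometry": None,
    "properties": {"datetime": "2021-05-25T00:00:00Z"},
    "links": [],
    "assets": {},
}
broken = {
    "type": "FeatureCollection",
    "id": "b",
    "geometry": {"type": "Point", "coordinates": [0, 0]},
}

good_problems = find_item_problems(good)
broken_problems = find_item_problems(broken)
```

Returning a list of messages rather than raising on the first failure mirrors what makes a linter pleasant to use: a data provider sees everything that needs fixing in one pass.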

These structural decisions were not made in a vacuum. A technical artifact like STACLint is also a cultural statement. The “what” we built was the direct product of “how” we operated as a community—which is the other half of the story.

IV. Community Lessons

If the structural pillars were the “what,” the community culture was the “how.” The human element was the engine for the entire cycle.

The Neutral Convener and the Rise of Champions

A successful community requires two things: a neutral entity to convene diverse interests and a core group of champions who emerge organically through sustained contribution. This is the core defense against the “Field of Dreams” fallacy. Our effort was anchored by Radiant Earth, which acted as the crucial “neutral organizing body.” They convened the sprints, provided logistical support, and successfully bridged public and private sector stakeholders who might otherwise have been competitors.

While Radiant Earth provided the venue, the project’s direction was driven by the community. In the early days, there was no formal “core team.” Influence was earned through pull requests and debates on GitHub. At the center of it all was Chris Holmes, who acted as the “benevolent dictator for life,” guiding the vision and ensuring the community stayed focused on the goal of a simple, practical specification.

The project also attracted key contributors from related efforts. Matthias Mohr, working on the openEO project, saw the natural alignment and joined after the first couple of sprints. His technical acumen and rigor were perfectly suited for the meticulous work of specification development. But the project’s success wasn’t driven by just a few individuals. It’s a testament to the dozens of critical developers, users, and data providers—the project’s champions—who showed up sprint after sprint to work, adopt, and do outreach.

As we iterated towards the 1.0 release, a group of persistent contributors naturally emerged as a de facto leadership team. When we finally formalized the first Steering Committee, it felt a bit like the dog who caught the car. We had reached a major milestone, but now faced a new set of questions about long-term governance and funding—a challenge we’ll explore in the next part of this retrospective.

The Adoption Wave: From Grassroots to Planetary Scale

STAC’s authority wasn’t bestowed by a single large entity; it was earned through a wave of adoption. While STAC itself is format-agnostic and can point to any underlying data file, its rise was synergistic with Cloud Optimized GeoTIFF (COG).

First, the release of Landsat Collection 2 by the USGS EROS Center provided a STAC catalog for the planet’s longest-running continuous Earth observation program. This instantly legitimized the approach for government and scientific data archives. At the same time, the work Element 84 completed with Digital Earth Africa brought the entire Sentinel-2 archive into a cloud-native STAC+COG format and added it to the AWS Public Data program. This combination created a critical mass of analysis-ready data that accelerated the ecosystem’s growth and gave the community the confidence to finalize the 1.0 release in May of 2021.

It was only after this broad, proven adoption that massive investment from efforts like the Microsoft Planetary Computer arrived. This was not the cause of STAC’s success, but the result of it. The community had already built a mature, battle-tested specification. Corporate investment became a powerful accelerant, not the initial catalyst.

The Right Amount of Bikeshedding

“Bikeshedding” is a term often used to describe a project’s tendency to focus on trivial issues while ignoring more important ones—and it’s almost always a negative thing. But I’d argue there is a right amount of bikeshedding, especially when it comes to naming things. Names are important. What we call things directly impacts clarity and usability, and spending time on what seems like pedantic detail can pay dividends in the long run.

The STAC community became known for engaging in pedantic debates over what often seemed like trivial details. The philosophy was that the core specification should be as small as possible, capturing only the most essential fields. This promoted stability, sparing implementers a constant churn of new versions requiring migration. Any additional features or community-specific needs were pushed into extensions. This principle was never more apparent than in the discussions over our core temporal field.

At the very first sprint, we spent a surprising amount of time debating what to call our core timestamp. datetime was chosen over timestamp not just for its explicit alignment with RFC 3339, but because the name itself is more descriptive: it clearly contains both a date and a time, whereas timestamp can be ambiguous. This was the right kind of bikeshedding—a level of productive pedantry that resulted in rigor and clarity from the start.

The harder debate was about how many timestamps to have. I, and others, argued for more complex options to handle time ranges natively in the core, but the final decision was to keep the core simple: a single, required datetime field representing a point in time. The very real need for time ranges was addressed by creating the start_datetime and end_datetime fields as an extension. That extension was so essential it was eventually promoted into the core specification. This story perfectly illustrates our philosophy: start minimal, and let real-world needs drive complexity through a managed, extensible process.
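The outcome of that debate can be sketched in a few lines of client code. The example below assumes the STAC 1.0 convention that an Item’s properties carry either a single datetime or a start_datetime and end_datetime pair; `parse_rfc3339` and `temporal_interval` are hypothetical helper names, not functions from a STAC library.

```python
from datetime import datetime


def parse_rfc3339(value: str) -> datetime:
    """Parse an RFC 3339 timestamp such as 2021-05-25T12:00:00Z."""
    # Older Python versions of fromisoformat() reject a trailing "Z",
    # so it is rewritten as an explicit UTC offset first.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def temporal_interval(properties: dict):
    """Hypothetical helper: return (start, end) for an Item's properties.

    A range is used when both range fields are present; otherwise the
    single datetime is treated as a zero-length interval.
    """
    start = properties.get("start_datetime")
    end = properties.get("end_datetime")
    if start is not None and end is not None:
        return parse_rfc3339(start), parse_rfc3339(end)
    instant = properties.get("datetime")
    if instant is None:
        raise ValueError("need datetime, or start_datetime and end_datetime")
    moment = parse_rfc3339(instant)
    return moment, moment


point = temporal_interval({"datetime": "2021-05-25T12:00:00Z"})
span = temporal_interval({
    "datetime": None,
    "start_datetime": "2021-05-01T00:00:00Z",
    "end_datetime": "2021-05-31T23:59:59Z",
})
```

A consumer that only understands the single datetime field still works for most Items, while range-aware tools get the richer information when it is present. That is the practical payoff of starting minimal.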

Radical Transparency

The “Field of Dreams” fallacy is ultimately defeated by building in the open. Every foundational decision in STAC was made in public, and the process was tested early on in the debate to define our most basic building blocks: the Catalog and the Collection.

Early on, there was significant confusion. What was the difference between a Catalog and a Collection? How did they relate to the ItemCollection returned by an API? The lines were blurry. The debate, captured across public GitHub issues (#174, #667), wasn’t theoretical. It was driven by real-world implementers. Static catalog creators had one set of needs; API developers had another. Every new use case challenged the definitions.

The community, working entirely in the open, forged a consensus. A Collection became a type of Catalog with specific, shared metadata (like extent and license) describing a coherent dataset. This clarity didn’t come from a small group in a closed room; it came from a transparent, sometimes messy, public process that forced us to address everyone’s use cases.
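The distinction the community settled on is easy to see side by side. The sketch below lists the required fields of a Catalog and a Collection as of STAC 1.0; the ids, descriptions, and extent values are invented, and `missing_fields` is a hypothetical helper used only for illustration.

```python
# Required top-level fields in STAC 1.0. A Collection asks for everything
# a Catalog does, plus the shared metadata describing a coherent dataset.
CATALOG_REQUIRED = {"type", "stac_version", "id", "description", "links"}
COLLECTION_REQUIRED = CATALOG_REQUIRED | {"license", "extent"}

# Illustrative records; the ids, descriptions, and extents are made up.
catalog = {
    "type": "Catalog",
    "stac_version": "1.0.0",
    "id": "example-catalog",
    "description": "A grouping of unrelated datasets.",
    "links": [],
}
collection = {
    "type": "Collection",
    "stac_version": "1.0.0",
    "id": "example-collection",
    "description": "Scenes from one sensor with shared licensing.",
    "license": "CC-BY-4.0",
    "extent": {
        "spatial": {"bbox": [[-180.0, -90.0, 180.0, 90.0]]},
        "temporal": {"interval": [["2015-06-23T00:00:00Z", None]]},
    },
    "links": [],
}


def missing_fields(obj: dict, required: set) -> list:
    """Hypothetical helper: required field names absent from obj, sorted."""
    return sorted(required - set(obj))


catalog_as_collection = missing_fields(catalog, COLLECTION_REQUIRED)
```

Here `catalog_as_collection` comes out as `["extent", "license"]`: exactly the shared metadata that turns a generic grouping into a description of one dataset.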

Conclusion: The Three Metrics of Success

The STAC formula was a risk that paid off. It was a fusion of specification speed, developer focus, and community buy-in. Ultimately, a standard’s success is measured by the only three metrics that matter:

- Adoption

- Adoption

- Adoption

Today, STAC is used by NASA, the USGS, major satellite companies, and even for data from Mars and the Moon. But perhaps the most rewarding outcome is seeing the community adopt this collaborative pattern to solve new interoperability challenges. Specifications like Cloud Optimized Point Cloud (COPC), Zarr, GeoParquet, and fiboa follow a similar playbook. That pattern is a call to action for everyone to find a community and build something better, together. This success belongs to the many individuals who rejected the “Field of Dreams” fallacy and chose to build with a community instead of just building for one.

So, what’s next? In the next (and likely final) part of this retrospective, we’ll dive into what’s wrong with STAC, the current pain points, and how we can continue to improve.

If you’d like to chat more about another community effort, the future of STAC, or how to implement STAC in your own work, reach out to us through our contact page.